Bleckmann

Bleckmann provides logistics solutions for fashion and lifestyle brands, with a strong focus on e-commerce. These solutions consist of multi-modal transport, customs services, omni-channel distribution, warehousing and value-added services. Together with its partners, Bleckmann has 25 warehouses in four countries on three continents. To track and deliver logistics services across these sites, the warehouses use various Warehouse Management Systems (WMS).

Business Case

Bleckmann’s expanding business demands more elaborate financial information for reporting. The financial controllers build reports in MS Excel to visualize operational costs at site level, and producing these weekly reports is time-consuming. Bleckmann wants to automate the reports and to provide insight into operational costs on a daily basis and at client and activity level, not only at site level. With automated reports, the financial controllers can spend their time on analysis instead of reporting. The goal is a 50% efficiency improvement by speeding up reporting and eliminating the risk of delays.

Challenges

- Cloud first

The strategy of the Business Intelligence department is “Cloud first”, with flexibility, scalability and zero management as the decisive factors.

- Resilience for change

Bleckmann aspired to have a data platform that is resilient to changes in:

- Business requirements:

Today’s use case differs from the one that will arise tomorrow, next month or even next year. Because business requirements cannot be fully predicted, Bleckmann planned to implement a data platform that can deal with these changes and is built for future business needs.

- Data sources (e.g. WMS):

Data sources change constantly, and when new sources are added for reporting purposes, the data platform needs to scale up or down and capture all changes within these sources.

- Ambitious timeline

Bleckmann’s ambition was to integrate all the data from seven source systems, design and implement a data model for reporting, and build the “operational costs KPI” report in less than six months.

- DataOps

By applying DataOps, Bleckmann would be able to secure high-quality releases and stay responsive to the continuously changing business needs of the industry.

- SAP data

Bleckmann’s finance department works with SAP, one of the leading ERP systems in logistics, which offers many functionalities and possibilities but is also known for its challenging data model. That data model became our biggest obstacle during the project. To tackle it, we closely involved Bleckmann’s SAP partner, who provided the much-needed knowledge of Bleckmann’s SAP setup and data model.

Solution

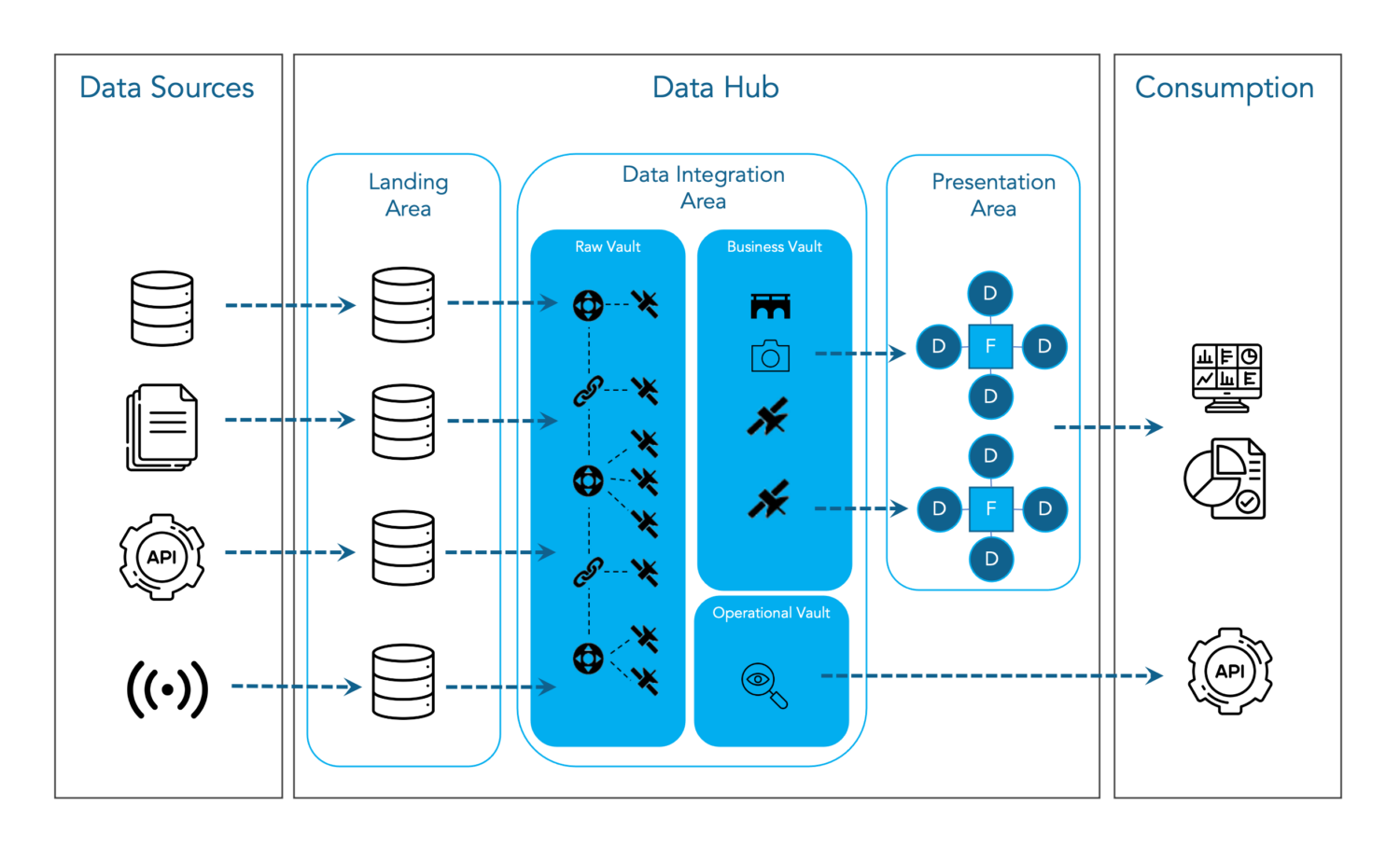

Together with the Business Intelligence team of Bleckmann, DataSense built a data platform in which all data sources can be integrated, now and in the future, and in which all changes within these data sources are stored. This future-proof data platform allows Bleckmann to represent the source data at any given point in time and to scale in an agile way when new data sources need to be added. It can be used for reporting, and in the future also for other applications via APIs. We call it a Data Hub.
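Representing the source data "at any given point in time" can be illustrated with a small Python sketch. It assumes a hypothetical in-memory history in which every change to a source record is stored as a new version with a load timestamp; the `SatelliteRow` and `state_as_of` names are ours and not part of any product. The state at a given moment is then the latest version of each record loaded on or before that moment.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class SatelliteRow:
    """One historised version of a source record (hypothetical structure)."""
    business_key: str
    load_date: datetime
    attributes: dict


def state_as_of(rows: list[SatelliteRow], as_of: datetime) -> dict[str, dict]:
    """Return, per business key, the attributes that were valid at `as_of`.

    A version is valid from its load_date until the next version of the same
    key is loaded, so we keep the latest version loaded on or before `as_of`.
    """
    latest: dict[str, SatelliteRow] = {}
    for row in rows:
        if row.load_date > as_of:
            continue  # loaded after the requested moment: not visible yet
        current = latest.get(row.business_key)
        if current is None or row.load_date > current.load_date:
            latest[row.business_key] = row
    return {key: row.attributes for key, row in latest.items()}


history = [
    SatelliteRow("ORDER-1", datetime(2021, 3, 1), {"status": "picked"}),
    SatelliteRow("ORDER-1", datetime(2021, 3, 2), {"status": "shipped"}),
    SatelliteRow("ORDER-2", datetime(2021, 3, 3), {"status": "picked"}),
]

print(state_as_of(history, datetime(2021, 3, 1, 12)))
# {'ORDER-1': {'status': 'picked'}}
print(state_as_of(history, datetime(2021, 3, 5)))
# {'ORDER-1': {'status': 'shipped'}, 'ORDER-2': {'status': 'picked'}}
```

Because nothing is overwritten, the same history answers both "what did we know on 1 March?" and "what do we know today?".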

But how did we tackle the challenges?

- Cloud first

After several meetings with DataSense and Snowflake, Snowflake became the first choice over other vendors because of its unique architecture, which separates storage from compute. Bleckmann already experienced Snowflake’s speed and flexibility during a short trial period. Other reasons for choosing Snowflake were that it is cloud-independent (it runs on AWS, Azure and Google Cloud) and that it requires zero management.

- Resilience for change

Instead of integrating only the data needed for the requested report, we integrated all functionally relevant data from the data sources in scope. This allows Bleckmann to respond to changing requirements, and all changes within the data sources are stored in the Data Hub.

To make this possible, the Data Hub is built with Data Vault 2.0, a methodology for integrating different data sources as well as future changes to the structures and data models of those sources.
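A central Data Vault 2.0 mechanism is hashing: business keys are normalised and hashed into hub keys, and a hash over a record’s descriptive attributes (a "hashdiff") shows whether a source record changed since the last load. The Python sketch below illustrates only that idea; the MD5 choice, the `;` and `|` separators and the in-memory list standing in for a satellite table are assumptions, not a description of how the Bleckmann Data Hub or Vaultspeed implement it.

```python
import hashlib
from datetime import datetime


def hash_key(*business_key_parts: str) -> str:
    """Hub hash key: trim and upper-case each key part, join, then MD5-hash."""
    normalised = ";".join(part.strip().upper() for part in business_key_parts)
    return hashlib.md5(normalised.encode("utf-8")).hexdigest()


def hash_diff(attributes: dict) -> str:
    """Hashdiff over the descriptive attributes, in a fixed (sorted) column order."""
    payload = "|".join(f"{name}={attributes[name]}" for name in sorted(attributes))
    return hashlib.md5(payload.encode("utf-8")).hexdigest()


def load_satellite(satellite: list[dict], business_key: str,
                   attributes: dict, load_date: datetime) -> bool:
    """Add a new satellite version only if the attributes changed.

    Returns True when a row was added, False when the record was unchanged.
    """
    hk = hash_key(business_key)
    versions = [row for row in satellite if row["hash_key"] == hk]
    latest = max(versions, key=lambda row: row["load_date"], default=None)
    new_diff = hash_diff(attributes)
    if latest is not None and latest["hash_diff"] == new_diff:
        return False  # same content as the latest version: nothing to store
    satellite.append({"hash_key": hk, "load_date": load_date,
                      "hash_diff": new_diff, **attributes})
    return True


sat_order = []  # stand-in for a satellite table
print(load_satellite(sat_order, "ORDER-1", {"status": "picked"}, datetime(2021, 3, 1)))    # True
print(load_satellite(sat_order, "ORDER-1", {"status": "picked"}, datetime(2021, 3, 2)))    # False
print(load_satellite(sat_order, " order-1 ", {"status": "shipped"}, datetime(2021, 3, 3)))  # True
```

Because unchanged records are skipped, loading the same extract twice adds nothing, while every real change is kept as a new version; the key normalisation makes " order-1 " and "ORDER-1" land on the same hub key.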

- Ambitious timeline

To meet the ambitious timeline, we used the Data Vault 2.0 automation tool Vaultspeed.

Vaultspeed is a SaaS tool that generates the Data Vault 2.0 model and the ELT pipelines that load the Data Hub.
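Vaultspeed’s internals are not described here, but the general idea of metadata-driven generation can be sketched: describe a hub once, then derive both its DDL and its load statement from that description. The `HubSpec` structure, the naming convention (`hub_<name>`, `hk_<name>`) and the Snowflake-style SQL below are illustrative assumptions, not Vaultspeed output.

```python
from dataclasses import dataclass


@dataclass
class HubSpec:
    """Hypothetical hub metadata (not Vaultspeed's actual format)."""
    name: str                 # hub name, e.g. "order"
    business_keys: list[str]  # business key column(s) in the source table
    source_table: str         # staging table the hub is loaded from


def hub_ddl(spec: HubSpec) -> str:
    """Generate the CREATE TABLE statement for a hub."""
    key_cols = "".join(f"    {col} VARCHAR NOT NULL,\n" for col in spec.business_keys)
    return (
        f"CREATE TABLE IF NOT EXISTS hub_{spec.name} (\n"
        f"    hk_{spec.name} CHAR(32) NOT NULL PRIMARY KEY,\n"
        f"{key_cols}"
        f"    load_date TIMESTAMP NOT NULL,\n"
        f"    record_source VARCHAR NOT NULL\n"
        f");"
    )


def hub_load(spec: HubSpec) -> str:
    """Generate an insert-only ELT statement that adds unseen business keys."""
    norm = [f"UPPER(TRIM(src.{col}))" for col in spec.business_keys]
    hk = f"MD5(CONCAT_WS(';', {', '.join(norm)}))"
    return (
        f"INSERT INTO hub_{spec.name} "
        f"(hk_{spec.name}, {', '.join(spec.business_keys)}, load_date, record_source)\n"
        f"SELECT DISTINCT {hk}, {', '.join(norm)}, CURRENT_TIMESTAMP(), '{spec.source_table}'\n"
        f"FROM {spec.source_table} src\n"
        f"WHERE {hk} NOT IN (SELECT hk_{spec.name} FROM hub_{spec.name});"
    )


order_hub = HubSpec(name="order", business_keys=["order_id"], source_table="stg_wms_orders")
print(hub_ddl(order_hub))
print(hub_load(order_hub))
```

Adding a new hub then means adding one metadata entry rather than hand-writing two SQL statements, which is what makes this style of generation fast.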

- DataOps

DataSense has built a DataOps framework in Python that automatically deploys the Vaultspeed-generated DDL and ELT jobs using Azure DevOps and Git.
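The framework itself is not described in detail, so the following is only a hypothetical Python sketch of one thing such a deployment step might do: apply generated SQL scripts in a safe order (DDL before ELT) and record which scripts were already applied, so that rerunning the pipeline deploys only new ones. `Script`, `deploy` and the `deploy_log` table are illustrative names, and `sqlite3` serves purely as a local stand-in for Snowflake so the sketch runs anywhere.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class Script:
    """A generated SQL artefact as it might be stored in Git (hypothetical layout)."""
    name: str  # path-like name, e.g. "ddl/001_hub_order.sql"
    kind: str  # "ddl" (structures) or "elt" (load job definitions)
    sql: str


# DDL must exist before the ELT objects that reference it.
DEPLOY_ORDER = {"ddl": 0, "elt": 1}


def deploy(conn: sqlite3.Connection, scripts: list[Script]) -> list[str]:
    """Apply scripts not yet recorded in deploy_log; return the names applied."""
    conn.execute("CREATE TABLE IF NOT EXISTS deploy_log (name TEXT PRIMARY KEY)")
    already_applied = {row[0] for row in conn.execute("SELECT name FROM deploy_log")}
    applied = []
    for script in sorted(scripts, key=lambda s: (DEPLOY_ORDER[s.kind], s.name)):
        if script.name in already_applied:
            continue  # deployed by an earlier run: skip
        conn.executescript(script.sql)  # sqlite3-specific: runs multi-statement SQL
        conn.execute("INSERT INTO deploy_log (name) VALUES (?)", (script.name,))
        applied.append(script.name)
    conn.commit()
    return applied


scripts = [
    Script("elt/001_v_order.sql", "elt",
           "CREATE VIEW v_order AS SELECT order_id FROM hub_order;"),
    Script("ddl/001_hub_order.sql", "ddl",
           "CREATE TABLE hub_order (hk_order TEXT PRIMARY KEY, order_id TEXT);"),
]
conn = sqlite3.connect(":memory:")
print(deploy(conn, scripts))  # ['ddl/001_hub_order.sql', 'elt/001_v_order.sql']
print(deploy(conn, scripts))  # []
```

In an Azure DevOps pipeline, a step like this would run after the scripts are checked out from Git; the ordering key and the log table are what make repeated deployments safe.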

- SAP data

The source analysis of SAP was time-consuming, but we managed it with the support of Bleckmann’s SAP partner.

Technology used

- Snowflake as cloud data platform

- Vaultspeed as Data Warehouse Automation tool

- Matillion as ETL tool

- Tableau as dashboarding tool