We’ve already established the synergy of dbt & Databricks. However, adding data warehouse automation to the mix might be the missing link in the story.

The combination of dbt & databricks provides a robust solution for streamlining data workflows and enhancing data lakehouse architectures. It leverages Databricks’ versatility in data processing and machine learning with dbt’s SQL based transformation and documentation capabilities.

VaultSpeed is a SaaS platform, covering data integration, transformation and modeling through a graphic user interface which is based on Data Vault 2.0 modeling. In case you need to refresh this modeling technique and architecture, check out our comparison of modeling techniques.

Lead by example

Throughout this post we’ll be using a simple example:

To elevate the DataSense onboarding and training process, HR needs some insights into the training and progress of their consultants/students.

By analyzing where the employees live and which knowledge they possess, they’ll be able to spread the skills as evenly as possible and fill in possible gaps step by step using two sources that contain coaching data and workforce data.

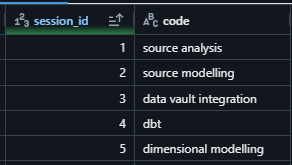

Source 1: COACHING

All data about which people followed which course at DataSense. This source contains three tables:

SESSION_ID

STUDENT_ID

STUDENT_SESSION_ID

These tables can be linked as follows:

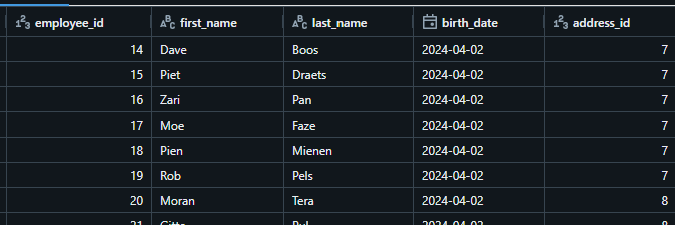

Source 2: WORKFORCE

All HR related data about employees, addresses, birthdates,..

This source contains two tables:

EMPLOYEE_ID

ADDRESS_ID

These tables can be linked as follows:

Important remark:

The EMPLOYEE_ID and STUDENT_ID don’t match, so we will have to integrate them in this case by using a business key (in this simplified example by using the first and last name of the person as the business key)

Use case problem

HR would like an overview of the subjects in Data Engineering that are less known by their employees in a certain province. That way they can spread the knowledge and narrow the gaps between their employees.

Secondly, they would like to observe the progress over time as gaps are filled step by step. This means historization of the data is crucial.

Solution

Fast integration of the data to deliver business value as soon as possible, while keeping track of the history. Writing this code manually in dbt efficiently could take you a while. So, we advise adding automation that works seamlessly with your current data stack.

VaultSpeed as the cherry on top

Adding automation to the solution will eliminate the risk of human error and give your team more time to focus on delivering clear business value.

The idea is to have a fast data integration and automatically generated code for dbt in a Databricks environment where all sources will be integrated in the silver layer.

The GUI of VaultSpeed will guide the end user through the flow. This way the amount of manual code and so the risk of human error is dramatically reduced. Once that generated code is deployed, it is added to the GIT repository of Databricks in dbt and thus part of the existing Databricks-dbt ecosystem. This gives your team more time to focus on the business concepts in the Gold layer.

The Gold layer is built by using facts and dimensions based on business concepts which will provide HR with the much-needed answer to its problem. Since this will be added to the dbt repository, it will automatically mean that it is part of the same data lineage in dbt.

Step by step set up

The VaultSpeed Cloud application (GUI) might be user friendly, but we’ll provide a short manual anyhow.

It all begins with tuning the tool by configuring parameters on different levels (System, Project, Data Vault, Source, …), to match it as closely as possible to what you are trying to achieve. Most of the parameters are added based on VaultSpeed’s hands on project experience.

For example: Case sensitivity in data, turning on/off testing automation like Referential Integrity or Data Quality, defining which change tracking loading strategy will be used, …

The source metadata is loaded into VaultSpeed and can be tuned in the source editor:

In case of missing relationships, they can be added in this step. Additionally, business keys can be chosen based upon constraints or by manually setting them.

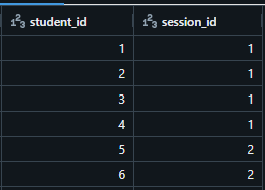

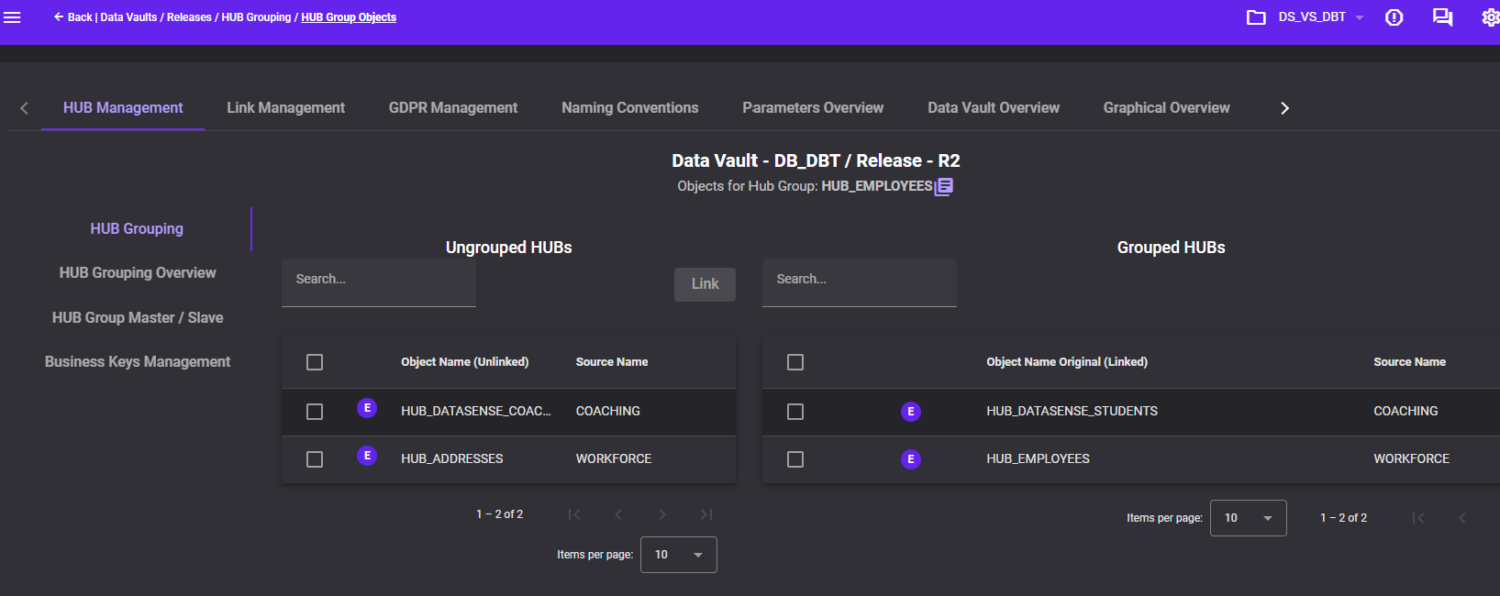

After configuring the sources, the Data Vault area will allow the user to combine the objects from different sources, which in turn represent the same business concepts. In this case, employees – students:

For our example the DATASENSE_STUDENTS and DATASENSE_EMPLOYEES are grouped as one business concept as both contain employees of DataSense. This will make it easier to link the sessions to the addresses of employees.

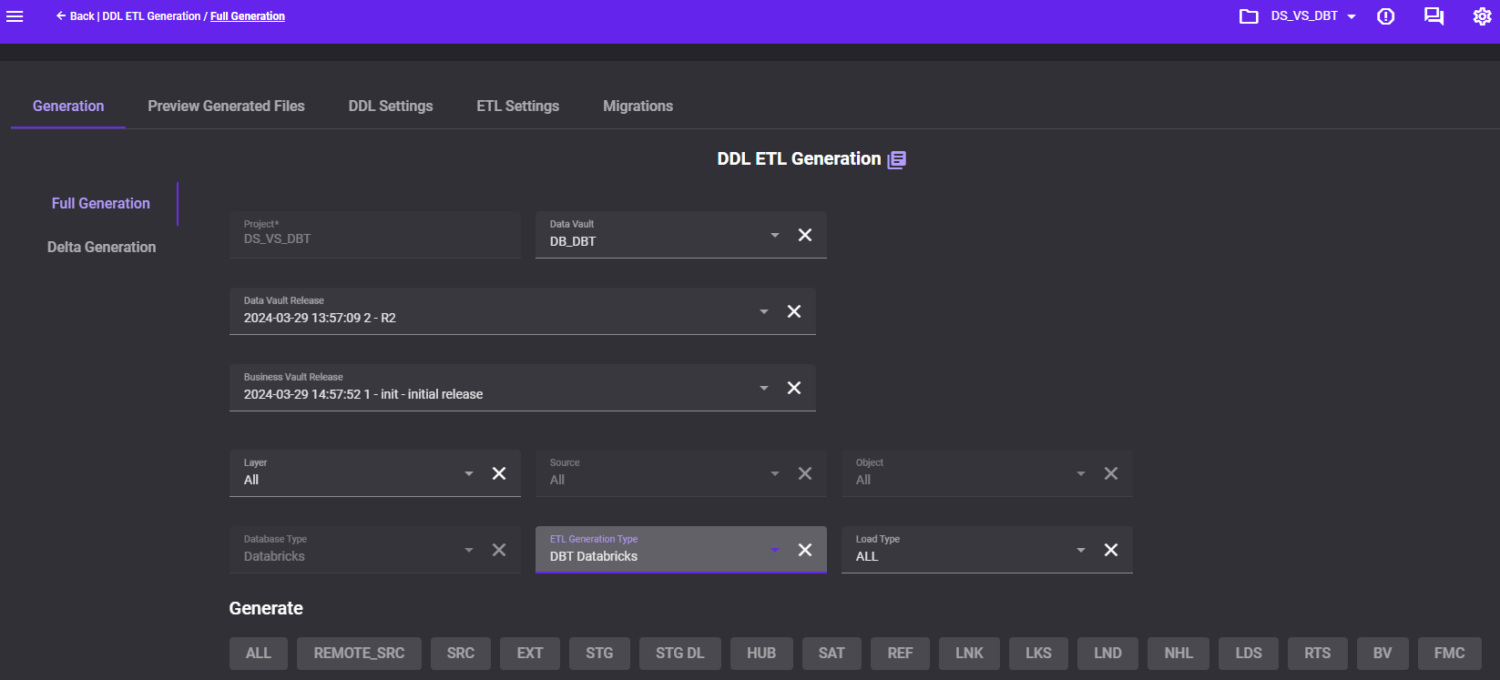

Once the integration is configured, code will be generated for Databricks-dbt.

This step will generate a zip file with all models, YML config files and macros necessary to integrate the source in Databricks.

VaultSpeed will allow you to add the code to your existing dbt repository in GIT. The DDL (delivered in the zip file) will be deployed separately in Databricks.

Adding business value through dbt

After checking out the repository in dbt, we can immediately run the dbt code to fill the integration layer with data (assuming the source tables from the other database are already available in the Bronze layer in Databricks).

To ensure everything runs smoothly, we perform additional dbt testing, such as counts, to confirm all source data is properly loaded into the Data Warehouse. Within DataSense, we’ve developed a specific framework for testing these counts on top of VaultSpeed generated code. We plan to demonstrate the value of these tests through a case study soon.

Once the Data Vault is loaded correctly, we’re ready to use those objects to build the gold layer (Dimensions and Facts) in dbt. For more information, see our comparison of modeling techniques.

With the silver layer automated, we can focus on combining historized data to answer business questions.

In this case we have the concept of addresses and the concept of the knowledge areas (sessions) which will be built as a dimension. The measures will be stored in a fact linking these dimensions.

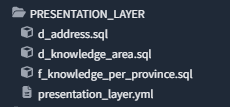

Dimensions:

- D_ADDRESS: addresses and a hierarchy on different aggregation levels (street – city – province – country)

- D_KNOWLEDGE_AREA: different possible knowledge areas

Facts:

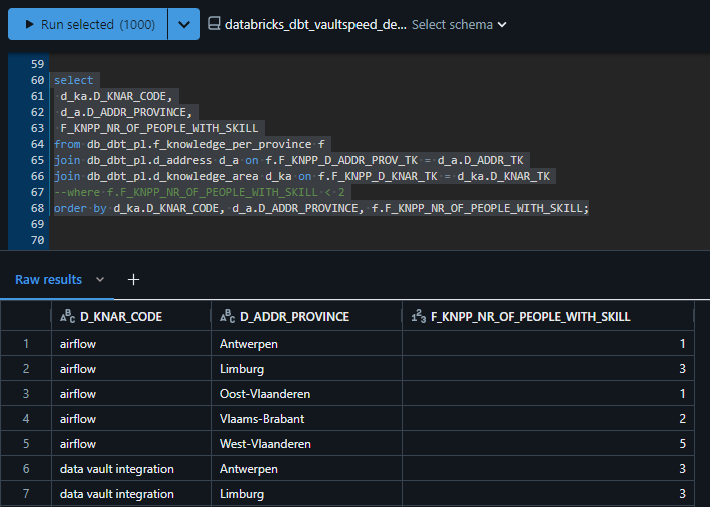

- F_KNOWLEDGE_PER_PROVINCE: gives us the measures about knowledge per province.

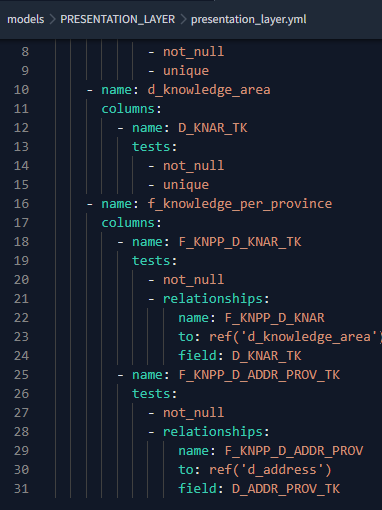

The SQL written in dbt is based on the analysis of what is needed. Since this is done manually, dbt Testing is added to the models to assure all technical keys of the FACT tables with a reference to the DIM, actually exist in the dimension.

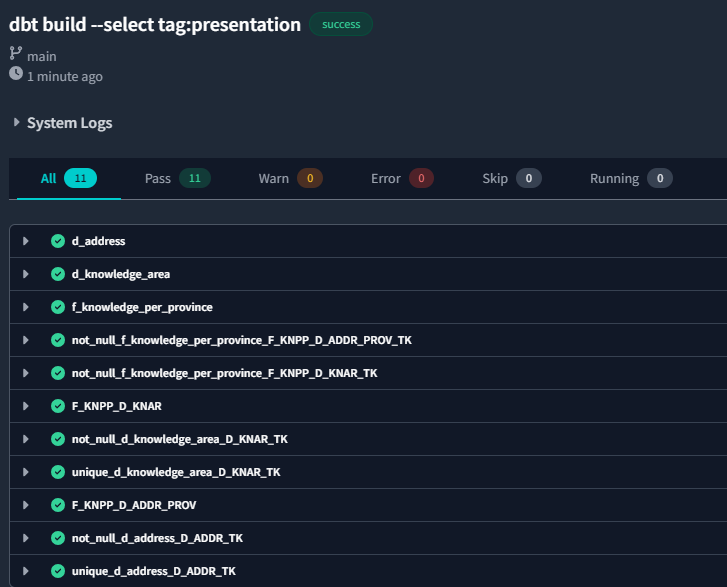

We’ve arrived at the last steps of the process, running the Gold layer. The models for this layer are run and dbt automatically knows the correct order in which the code should be executed if there are any dependencies. All tests will be executed in parallel.

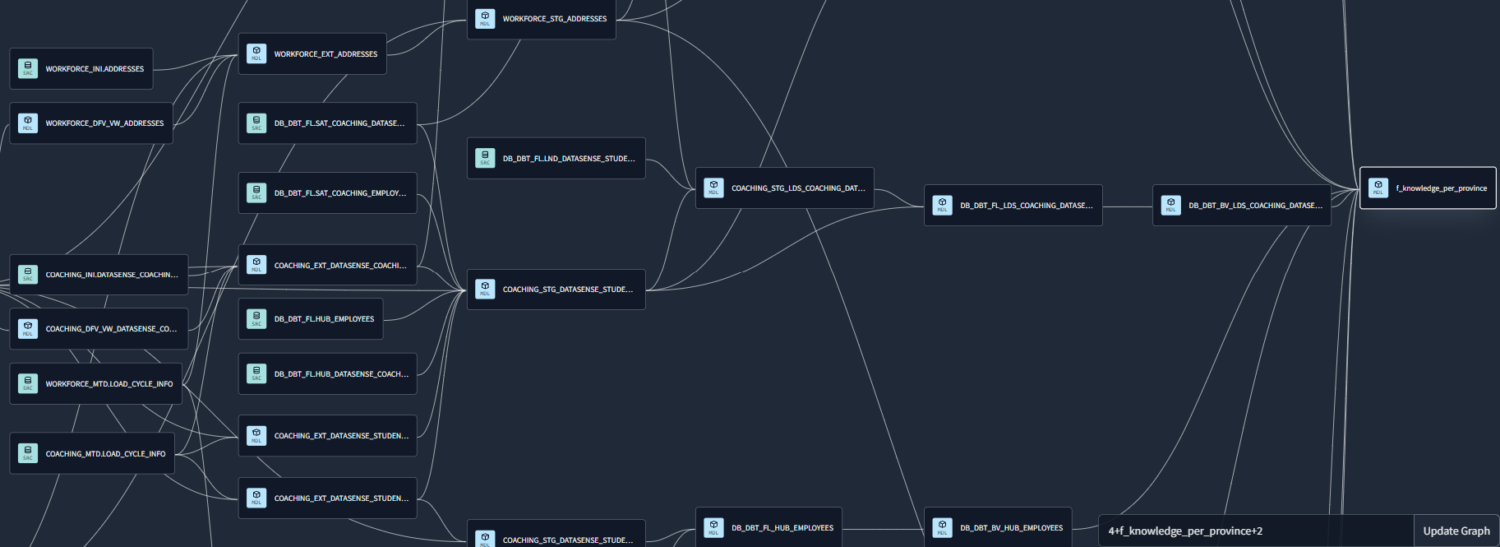

As mentioned in the introduction, when all this code is added to dbt, it is part of the Databricks ecosystem and the data lineage.

This can be a full data lineage, from Bronze to Gold:

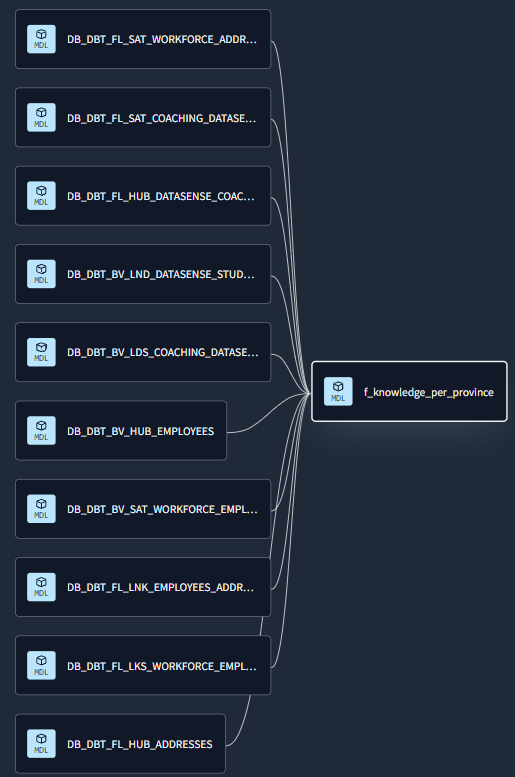

The data lineage can also be a subset of the lineage:

The one-million-dollar question is of course if we were able to get the answer HR asked for. Combining the fact and dimensions on top of the integrated Data Vault in a Databricks query gave us a correct and verified (tested) overview of the knowledge of the DataSense employees and which provinces are lacking knowledge on some topics.

Conclusion

In essence, combining dbt with Databricks has already offered a solid solution for optimizing data workflows and bolstering data lakehouse architectures. Incorporating VaultSpeed into this mix will expedite our results without necessitating excessive attention to integration details or manual procedure development. Instead, developers can concentrate on delivering business value in the Gold layer while maintaining all code within a single ecosystem.

By integrating dbt tests into the equation, we create a comprehensive package that instills confidence in the pipeline’s outcomes.