Notebooks are widely used in data science and machine learning to develop code and present the results. Databricks notebooks facilitate real-time collaboration with colleagues, creating data science and machine learning workflows in multiple languages with built-in data visualizations.

Databricks notebooks can be used for the following:

Developing code using Python, SQL, R, and Scala. The different languages can be combined in one notebook. To run a cell in a language other than the notebook’s default, start the cell with a magic command such as %python or %sql.

It’s also possible to use autocomplete and automatic formatting for Python and SQL.

Run other notebooks from a notebook with %run or dbutils.notebook.run().

Environments can be customized with libraries in notebooks and jobs. Java, Scala, and Python libraries can be uploaded.

Create and manage (scheduled) jobs to automatically run tasks directly from the notebook UI.

Results can be exported in two different formats: .html or .ipynb. This can be done in the notebook toolbar by selecting ‘File’ > ‘Export’ and selecting the export format.

Use Git integration with Databricks Repos to store notebooks. It supports the following operations: clone a repository, commit and push, pull, branch management, and visual comparison of changes.

If you have notebooks associated with a Delta Live Tables pipeline, you can access the pipeline’s details, initiate a pipeline update, or remove the pipeline.

As stated above, Databricks notebooks facilitate real-time collaboration with colleagues. You can work together in a notebook, add comments in notebooks, and the notebooks can be shared.
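As an illustration of running one notebook from another, the sketch below wraps a notebook run in a simple retry loop. Unlike %run, which executes the other notebook inline, dbutils.notebook.run() takes a path, a timeout in seconds, and a dictionary of arguments, and returns the string the child notebook passes to dbutils.notebook.exit(). The helper name run_with_retry, its parameters, and the example path and argument are assumptions made for this illustration; the run function is passed in so the helper can also be tried outside Databricks.

```python
import time


def run_with_retry(run_notebook, path, timeout_seconds, arguments=None,
                   max_retries=3, delay_seconds=0):
    """Run a notebook via run_notebook(path, timeout_seconds, arguments).

    Hypothetical helper: retries up to max_retries times and returns the
    child notebook's exit value on the first successful attempt.
    """
    arguments = arguments or {}
    last_error = None
    for attempt in range(1, max_retries + 1):
        try:
            return run_notebook(path, timeout_seconds, arguments)
        except Exception as error:  # a failed child run raises an exception
            last_error = error
            if attempt < max_retries:
                time.sleep(delay_seconds)
    raise RuntimeError(f"{path} failed after {max_retries} attempts") from last_error


# Inside a Databricks notebook, where dbutils is predefined, a call could look
# like this (the path and argument name are made up for the example):
# result = run_with_retry(dbutils.notebook.run, "./child_notebook", 60,
#                         {"run_date": "2024-01-01"})
```

Passing the run function as a parameter is only a design choice for this sketch; in a notebook you could call dbutils.notebook.run() directly.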

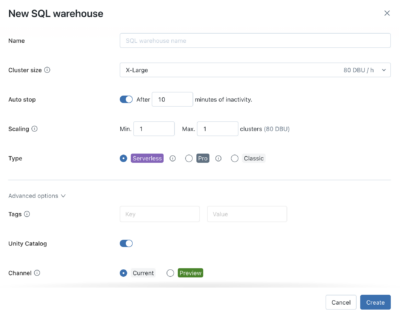

Use SQL warehouses to run Databricks notebooks

Databricks notebooks can now be attached to SQL warehouses. These warehouses are the same powerful resources used for Databricks SQL, and they offer better performance for SQL tasks compared to general-purpose clusters. When you’re connected to a SQL warehouse, only the SQL commands in your notebook will run. Any code in other languages like Python or Scala won’t be executed. However, your Markdown cells will still display as usual.

In essence, this update allows you to run SQL operations more efficiently in Databricks Notebooks using dedicated SQL warehouses, improving performance, and enhancing the experience for SQL users.
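To make the behavior concrete, here is a toy model of how cells in a mixed-language notebook are treated when the notebook is attached to a SQL warehouse, following the description above. It is not Databricks’ implementation: the function names, the "run" / "render" / "skip" labels, and the rule of reading the magic command from the first token of a cell are all assumptions made for illustration.

```python
# Magic commands mapped to the language they select (illustrative subset).
MAGIC_LANGUAGES = {
    "%python": "python",
    "%sql": "sql",
    "%scala": "scala",
    "%r": "r",
    "%md": "markdown",
}


def cell_language(cell_source, default_language):
    """Return the language of a cell: its magic command, else the notebook default."""
    tokens = cell_source.split()
    first_token = tokens[0] if tokens else ""
    return MAGIC_LANGUAGES.get(first_token, default_language)


def sql_warehouse_behavior(cell_source, default_language="sql"):
    """Toy rule: SQL cells run, Markdown cells render, everything else is skipped."""
    language = cell_language(cell_source, default_language)
    if language == "sql":
        return "run"
    if language == "markdown":
        return "render"
    return "skip"


cells = ["SELECT 1", "%python\nprint('hello')", "%md\n# Notes"]
for source in cells:
    print(repr(source), "->", sql_warehouse_behavior(source))
```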

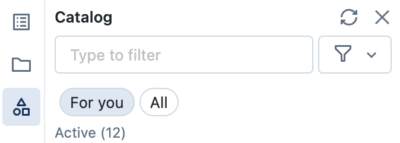

With the new unified schema browser, you can easily see all the available data in the Unity Catalog metastore without leaving the notebook or SQL editor.

You can even filter the list to display only the tables relevant to your current notebook by selecting “For you”. Plus, as you type your search, the display automatically updates to show items that match your search text.

In short, this feature allows you to seamlessly view and filter data from various sources within a single interface, making data exploration more efficient.

Share notebooks with others using Delta Sharing within Databricks

Delta Sharing offers both convenience and security. Sharing notebooks allows for collaborative work across different metastores and accounts, making it easier for people to collaborate and make the most of shared data using notebooks.

In a nutshell, Delta Sharing in Databricks enables secure and collaborative sharing of notebook files, enhancing data collaboration across different environments.

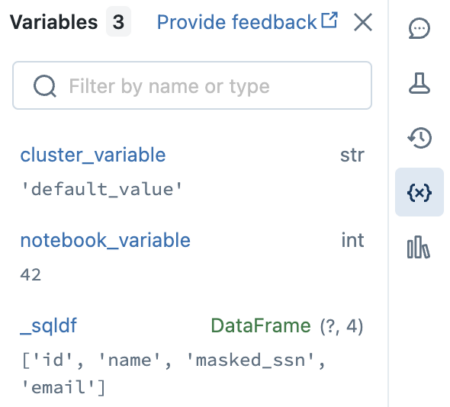

Use the Variable Explorer in notebooks

The Variable Explorer simplifies debugging in your Databricks notebooks by providing insights into Python, Scala, and R variables and enabling breakpoint-based debugging. For Python on Databricks Runtime 12.1 and above, the variables update as a cell runs. For Scala, R, and for Python on Databricks Runtime 12.0 and below, variables update after a cell finishes running.